Numbers are powerful, statistics are powerful, but they must be used correctly and responsibly. Leaders need to use data to help take decisions and measure progress, but leaders also need to make sure that they know where limitations creep into data, particularly when it's processed into summary figures.

This links quite closely to this post by David Didau (@Learningspy) where he discusses availability bias - i.e. being biased because you're using the data that is available rather than thinking about it more deeply.

As part of this there is an important misuse of percentages that as a maths teacher I feel the need to highlight... basically when you turn raw numbers into percentages it can add weight to them, but sometimes this weight is undeserved...

Percentages can end up being discrete measures dressed up as continuous

Quick reminder of GCSE data types - Discrete data is in chunks, it can't take values between particular points. Classic examples might be shoe sizes where there is no measure between size 9 and size 10, or favourite flavours of crisps where there is no mid point between Cheese & Onion and Smoky Bacon.

Continuous data can always be subdivided further, for example a measure of height could be in metres, centimetres, millimetres and so on - it can keep on being divided.

The problem with percentages is that they look continuous - you can quote 27%, 34.5%, 93.2453%. However the data used to calculate the percentage actually imposes discrete limits to the possible outcome. A sample of 1 can only have a result of 0% or 100%, a sample of 2 can only result in 0%, 50% or 100%, 3 can only give 0%, 33.3%, 66.7% or 100%, and so on. Even with 200 data points you can only have 201 separate percentage value outputs - it's not really continuous unless you get to massive samples.
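To make the discrete steps concrete, here is a minimal Python sketch that lists every percentage a group of a given size can actually produce. The function name `possible_percentages` is just an illustrative choice, and values are rounded to one decimal place as in the examples above.

```python
def possible_percentages(sample_size):
    """List every percentage a group of `sample_size` can produce (1 d.p.)."""
    # k is the number of individuals meeting the measure: 0, 1, ..., sample_size
    return [round(100 * k / sample_size, 1) for k in range(sample_size + 1)]


for n in (1, 2, 3):
    print(n, possible_percentages(n))
# 1 [0.0, 100.0]
# 2 [0.0, 50.0, 100.0]
# 3 [0.0, 33.3, 66.7, 100.0]

# Even 200 data points only allow 201 distinct outcomes.
print(len(set(possible_percentages(200))))  # 201
```

Anything between those listed values simply cannot be reported for that group, however continuous the percentage looks.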

It LOOKS continuous and is talked about like a continuous measure, but it is actually often discrete and determined by the sample that you are working with.

Percentages as discrete data makes setting targets difficult for small groups

Picture a school that sets an overall target that at least 80% of students in a particular category (receipt of pupil premium, SEN needs, whatever else) are expected to meet or exceed expected progress.

In this hypothetical school there are three equivalent classes, let's call them A, B and C. In class A we can calculate that 50% of these students are making expected progress; in class B it's 100%, and in class C it's 0%. On face value Class A is 30 percentage points behind target, B is 20 ahead and C is 80 behind, but that's completely misleading...

Class A has two students in this category, one is making expected progress, the other isn't. As such it's impossible to meet the 80% target exactly in this class - the only options are 0%, 50% or 100%. If the whole school target at 80% accepts that some students may not reach expected progress then by definition you have to accept that 50% might be on target for this specific class. You might argue that 80% is closer to 100% so that should be the target for this class, but that means that this teacher has to achieve 100% where the whole school is only aiming at 80%! The school has room for error but this class doesn't! To suggest that this teacher is underperforming because they haven't hit 100% is unfair. Here the percentage has completely confused the issue, when what's really important is whether these 2 individuals are learning as well as they can.

Class B and C might each have only one student in this category. But it doesn't mean that the teacher of class B is better than that of class C. In class B the student's category happens to have no significant impact on their learning in that subject, they progress alongside the rest of the class with no issues, with no specific extra input from the teacher. In class C the student is also a young carer and misses extended periods from school; when present they work well but there are gaps in their knowledge due to absences that even the best teacher will struggle to fill. To suggest that either teacher is more successful than the other on the basis of this data is completely misleading as the detailed status of individual students is far more significant.

What this is intended to illustrate is that taking a target for a large population of students and applying it to much smaller subsets can cause real issues. Maybe the 80% works at a whole school level, but surely it makes much more sense at a class level to talk about the individual students rather than reducing them to a misleading percentage?
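As a rough sketch of why a whole school target behaves so differently in small groups, the Python below works out how many students a group needs in order to reach a given target, and what percentage that really demands. The helper name `effective_target` and the default of 80% are assumptions taken from the hypothetical school above, not from any real target-setting system.

```python
def effective_target(group_size, target_pct=80):
    """Return (students needed, percentage that represents) to reach the target."""
    # Integer ceiling of target_pct% of group_size, avoiding float rounding issues.
    needed = (target_pct * group_size + 99) // 100
    return needed, 100 * needed / group_size


for size in (1, 2, 5, 10):
    needed, pct = effective_target(size)
    print(f"group of {size}: need {needed} student(s), i.e. {pct:.0f}%")
# group of 1: need 1 student(s), i.e. 100%
# group of 2: need 2 student(s), i.e. 100%
# group of 5: need 4 student(s), i.e. 80%
# group of 10: need 8 student(s), i.e. 80%
```

For groups of one or two the "80%" target silently becomes a 100% target, which is exactly the problem facing the teacher of class A.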

Percentage amplifies small populations into large ones

Simply because percent means "per hundred" we start to picture large numbers. When we state that 67% of books reviewed have been marked in the last two weeks it conjures up images of 67 books out of 100. However that statistic could have been arrived at having only reviewed 3 books, 2 of which had been marked recently. The percentage gives no indication of the true sample size, and therefore 67% could hide the fact that the next possible step up is 100%!

If the following month the same measure is quoted as having jumped to 75% it looks like a big improvement, but it could simply be 9 out of 12 this time, compared to 8 out of 12 the previous month. Arithmetically the percentages are correct (given rounding), but the apparent step change from 67% to 75% is actually far less impressive when described as 8/12 vs 9/12. As a percentage it suggests a big move in the population; as a fraction it means only one more meeting the measure.

You can get a similar issue if a school is grading lessons/teaching and reports 72% good or better in one round of reviews, and then sees 84% in the next. (Many schools are still doing this type of grading and summary, I'm not going to debate the rights and wrongs here - there are other places for that). However the 72% is the result of 18 good or better out of 25 seen, the 84% is the result of 21 out of 25. So the 12 percentage point jump is due to just 3 teachers flipping from one grade to the next.
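The two examples above can be checked with a few lines of Python. This is only a sketch, and `describe_change` is a made-up helper name; it reports the rounded percentages alongside the number of individuals who actually changed.

```python
def describe_change(before, after, total):
    """Return (percent before, percent after, individuals who changed)."""
    pct_before = round(100 * before / total)
    pct_after = round(100 * after / total)
    return pct_before, pct_after, after - before


# Book review: 8 of 12 marked, then 9 of 12.
print(describe_change(8, 9, 12))    # (67, 75, 1)

# Lesson grading: 18 of 25 good or better, then 21 of 25.
print(describe_change(18, 21, 25))  # (72, 84, 3)
```

Quoting the third number next to the percentages keeps the size of the real change in view.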

Basically when your population is below 100 an individual piece of data is worth more than 1% and it's vital not to forget this. Quoting a small population as a percentage amplifies any apparent changes, and this effect increases as the population size shrinks. The smaller your population the bigger the amplification. So with a small population a positive change looks more positive as a percentage, and a negative change looks more negative as a percentage.
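The amplification is simply 100 divided by the population size. A small Python sketch, with the illustrative name `points_per_individual`, shows how quickly it grows as the group shrinks:

```python
def points_per_individual(population):
    """Percentage points that a single individual contributes."""
    return 100 / population


for size in (200, 100, 25, 12, 3):
    print(f"population {size:>3}: one individual = "
          f"{points_per_individual(size):.1f} percentage points")
# population 200: one individual = 0.5 percentage points
# population 100: one individual = 1.0 percentage points
# population  25: one individual = 4.0 percentage points
# population  12: one individual = 8.3 percentage points
# population   3: one individual = 33.3 percentage points
```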

Being able to calculate a percentage doesn't mean you should

I guess to some extent I'm talking about an aspect of numeracy that gets overlooked. The view could be that if you know the arithmetic method for calculating a percentage then so long as you do that calculation correctly then the numbers are right. Logic follows that if the numbers are right then any decisions based on them must be right too. But this doesn't work.

The numbers might be correct but the decision may be flawed. Comparing this to a literacy example might help. I can write a sentence that is correct grammatically, but that does not mean the sentence must be true. The words can be spelled correctly, in the correct order and punctuation might be flawless. However the meaning of the sentence could be completely incorrect. (I appreciate that there might be some irony in that I may have made unwitting errors in this sentence about grammar - corrections welcome!)

For percentage calculations then the numbers may well be correct arithmetically but we always need to check the nature of the data that was used to generate these numbers and be aware of the limitations to the data. Taking decisions while ignoring these limitations significantly harms the quality of the decision.

Other sources of confusion

None of the above deals with variability or reliability in the measures used as part of your sample, but that's important too. If your survey of books could have given a slightly different result if you'd chosen different books, different students or different teachers then there is an inherent lack of repeatability to the data. If you're reporting a change between two tests then anything within test to test variation simply can't be assumed to be a real difference. Apparent movements of 50% or more could be statistically insignificant if the process used to collect the data is unreliable. Again the numbers may be arithmetically sound, but the statistical conclusion may not be.
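One rough way to get a feel for this, which goes beyond anything calculated above, is to put an approximate 95% confidence interval around each proportion. The sketch below uses the Wilson score interval; that choice, the function name `wilson_interval` and the value z = 1.96 are my assumptions for illustration, and the method treats the lessons or books seen as a simple random sample, which real reviews often are not.

```python
import math


def wilson_interval(successes, total, z=1.96):
    """Approximate 95% Wilson score interval for a proportion (as fractions)."""
    p = successes / total
    denom = 1 + z**2 / total
    centre = (p + z**2 / (2 * total)) / denom
    half_width = z * math.sqrt(p * (1 - p) / total + z**2 / (4 * total**2)) / denom
    return centre - half_width, centre + half_width


# The lesson grading example: 18 of 25, then 21 of 25.
for good, seen in ((18, 25), (21, 25)):
    low, high = wilson_interval(good, seen)
    print(f"{good}/{seen}: roughly {100 * low:.0f}% to {100 * high:.0f}%")
```

For 25 lessons the two intervals overlap heavily, which is a hint (not a formal test) that the move from 72% to 84% could easily sit within sampling variation.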

Draw conclusions with caution

So what I'm really trying to say is that the next time someone starts talking about percentages try to look past the data and make sure that it makes sense to summarise it as a percentage. Make sure you understand what discrete limitations the population size has imposed, and try to get a feel for how sensitive the percentage figures are to small changes in the results.

By all means use percentages, but use them consciously with knowledge of their limitations.

As always - all thoughts/comments welcome...

Saturday, 14 June 2014

Saturday, 7 June 2014

RAG123 is not the same as traffic lights

I've written regularly about RAG123 since starting it as an initial trial in November 2013, and I still view it as the single most important thing I've discovered as a teacher. It's now absolutely central to my teaching practice, but I do fear that at times people misunderstand what RAG123 is all about. They see the colours and they decide it is just another version of traffic lighting or thumbs up/across/down AFL. I'm sure it gets dismissed as "lazy marking", but the reality is that it is much, much more than marking.

As an example of RAG123 being judged at a surface level without its depth really being understood, I was recently directed to the Ofsted document "Mathematics made to measure" found here. I'd read this document some time ago and it is certainly a worthwhile read for anyone in a maths department, particularly those leading/managing the subject, but it may well provide useful thoughts to those with other specialisms. There is a section (paragraphs 88-99) presented under the subheading "Marking: the importance of getting it right" - it was suggested to me that RAG123 fell foul of the good practice recommended in these paragraphs, was even explicitly criticised as traffic lighting, and as such isn't a good approach to follow.

Having read the document again I actually see RAG123 as fully in line with the recommendations of good practice in the Ofsted document and I'd like to try and explain why....

The paragraphs below (incl paragraph numbers) are cut & pasted directly from the Ofsted document (italics), my responses are shown in bold:

88. Inconsistency in the quality, frequency and usefulness of teachers’ marking is a perennial concern. The best marking noted during the survey gave pupils insight into their errors, distinguishing between slips and misunderstanding, and pupils took notice of and learnt from the feedback. Where work was all correct, a further question or challenge was occasionally presented and, in the best examples, this developed into a dialogue between teacher and pupil.

RAG123 gives consistent quality and frequency to marking. Errors and misunderstandings seen in a RAG123 review can be addressed either in marking or through adjustments to the planning for the next lesson. The speed of turnaround between work done, marking done/feedback given, pupil response and follow up review by the teacher means that real dialogue can happen in marking.

89. More commonly, comments written in pupils’ books by teachers related either to the quantity of work completed or its presentation. Too little marking indicated the way forward or provided useful pointers for improvement. The weakest practice was generally in secondary schools where cursory ticks on most pages showed that the work had been seen by the teacher. This was occasionally in line with a department’s marking policy, but it implied that work was correct when that was not always the case. In some instances, pupils’ classwork was never marked or checked by the teacher. As a result, pupils can develop very bad habits of presentation and be unclear about which work is correct.

With RAG123 ALL work is seen by the teacher - there is no space for bad habits to develop or persist. While it can be that the effort grading could be linked to quantity or presentation it should also be shaped by the effort that the teacher observed in the lesson. Written comments/corrections may not be present in all books but corrections can be applied in the next lesson without the need for the teacher to write loads down. This can be achieved in various ways, from 1:1 discussion to changing the whole lesson plan.

90. A similar concern emerged around the frequent use of online software which requires pupils to input answers only. Although teachers were able to keep track of classwork and homework completed and had information about stronger and weaker areas of pupils’ work, no attention was given to how well the work was set out, or whether correct methods and notation were used.

Irrelevant to RAG123

91. Teachers may have 30 or more sets of homework to mark, so looking at the detail and writing helpful comments or pointers for the way forward is time consuming. However, the most valuable marking enables pupils to overcome errors or difficulties, and deepen their understanding.

Combining RAG123 with targeted follow up/DIRT does exactly this in an efficient way.

Paragraphs 92 & 93 simply refer to examples given in the report and aren't relevant here.

94. Some marking did not distinguish between types of errors and, occasionally, correct work was marked as wrong.

Always a risk in all marking, RAG123 is not immune, but neither is any other marking. However given that RAG123 only focuses on a single lesson's work the quantity is smaller so there is a greater chance that variations in students' work will be seen and addressed.

95. At other times, teachers gave insufficient attention to correcting pupils’ mathematical presentation, for instance, when 6 ÷ 54 was written incorrectly instead of 54 ÷ 6, or the incorrect use of the equals sign in the solution of an equation.

Again a risk in all marking and RAG123 is not immune, but it does give the opportunity for frequent and repeated corrections/highlighting of these errors so that they don't become habits.

96. Most marking by pupils of their own work was done when the teacher read out the answers to exercises or took answers from other members of the class. Sometimes, pupils were expected to check their answers against those in the back of the text book. In each of these circumstances, attention was rarely paid to the source of any errors, for example when a pupil made a sign error while expanding brackets and another omitted to write down the ‘0’ place holder in a long multiplication calculation. When classwork was not marked by the teacher or pupil, mistakes were unnoticed.

With RAG123 ALL work is seen by the teacher - they can look at incorrect work and determine what the error was, either addressing it directly with the student or if it is widespread taking action at whole class level.

97. The involvement of pupils in self-assessment was a strong feature of the most effective assessment practice. For instance, in one school, Year 4 pupils completed their self-assessments using ‘I can …’ statements and selected their own curricular targets such as ‘add and subtract two-digit numbers mentally’ and ‘solve 1 and 2 step problems’. Subsequent work provided opportunities for pupils to work on these aspects.

The best use of RAG123 asks students to self assess with a reason for their rating. Teachers can review/respond and shape these self assessments in a very dynamic way due to the speed of turnaround. It also gives a direct chance to follow up by linking to DIRT.

98. An unhelpful reliance on self-assessment of learning by pupils was prevalent in some of the schools. In plenary sessions at the end of lessons, teachers typically revisited the learning objectives, and asked pupils to assess their own understanding, often through ‘thumbs’, ‘smiley faces’ or traffic lights. However, such assessment was often superficial and may be unreliable.

Assessment of EFFORT as well as understanding in RAG123 is very different to these single dimension assessments. I agree that sometimes the understanding bit is unreliable. However with RAG123 the teacher reviews and changes the pupil's RAG123 rating based on the work done/seen in class. As such it becomes more accurate once reviewed. Also the reliability is often improved by asking students to explain why they deserve that rating. The effort bit is vital though... If a student is trying as hard as they can (G) then it is the teacher's responsibility to ensure that they gain understanding. If a student is only partially trying (A) then the teacher's impact will be limited. If a student is not trying at all (R) then even the most awesome teacher will not be able to improve their understanding. By highlighting and taking action on the effort side it emphasises the student's key input to the learning process. While traffic lights may very well be ineffective as a single shot self assessment of understanding, when used as a metaphor for likely progress given RAG effort levels then Green certainly is Go, and Red certainly is stop.

99. Rather than asking pupils at the end of the lesson to indicate how well they had met learning objectives, some effective teachers set a problem which would confirm pupils’ learning if solved correctly or pick up any remaining lack of understanding. One teacher, having discussed briefly what had been learnt with the class, gave each pupil a couple of questions on pre-prepared cards. She took the cards in as the pupils left the room and used their answers to inform the next day’s lesson planning. Very occasionally, a teacher used the plenary imaginatively to set a challenging problem with the intention that pupils should think about it ready for the start of new learning in the next lesson.

This is an aspect of good practice that can be applied completely alongside RAG123, in fact the "use to inform the next day's lesson planning" is something that is baked in with daily RAG123 - by knowing exactly the written output from one lesson you are MUCH more likely to take account of it in the next one.

So there you have it - I see RAG123 as entirely in line with all the aspects of best practice identified here. Don't let the traffic light wording confuse you - RAG123 as deployed properly isn't anything like a single dimension traffic light self assessment - it just might share the colours. If you don't like the colours and can't get past that bit then define it as ABC123 instead - it'll still be just as effective and it'll still be the best thing you've done in teaching!

All comments welcome as ever!

Reflecting on reflections

Reflecting is hard, really hard! It requires an honesty with yourself, an ability to take a step back from what you've done (that you have a personal attachment to) and to think deeply about how successful you've been. Ideally it should also involve some diagnosis on why you have/haven't been successful, and what you might do differently the next time you face a similar situation.

Good reflection is really high order thinking

If you consider where the skills required or the type of thinking for reflection lie in Bloom's taxonomy then it's the top end, high order thinking. You have to analyse and evaluate your performance, and then create ideas on how to improve.

Some people don't particularly like Bloom's and might want to lob rocks at anything that refers to it. If you'd prefer to use an alternative taxonomy like SOLO (see here) then we're still talking about the higher end Relational and Extended Abstract type of thinking. Anyone involved in reflection needs to make links between various areas of understanding, and ideally extend this into a "what if" situation for the future. Basically use whatever taxonomy of thinking you like and reflection/metacognition is right at the top in terms of difficulty.

The reason I am talking about this is that one of the things I keep seeing on twitter, and also in observation feedback, work scrutiny evaluations and so on, is comments about poor quality self assessment & reflections from students.

Sometimes this is a teacher getting frustrated when students asked to reflect just end up writing comments like "I understood it," "I didn't get it" or "I did ok." Other times it is someone reviewing books that might suggest that the student's reflections don't indicate that they know what they need to do to improve.

It often crops up, and one of the ways I most often hear about it is when someone is first trying out RAG123 marking (Not heard of RAG123? - see here, here and then any of these). This structure for marking gives so many opportunities for self assessment and dialogue that the teacher sees lots of relatively poor reflective comments in one go and finds it frustrating.

Now having thought about the type of thinking required for good reflection is it a real surprise that a lot of students struggle? To ask a group to reflect is pushing them to a really high level of thought. Asking the question is completely valid, it's good to pose high order questions, but we really shouldn't be surprised if we get low order answers even from very able students, and particularly from weaker students. Some may not yet have the cognitive capacity to make a high quality response, for others it might be a straight vocabulary/literacy issue - students can't talk about something coherently unless they have the appropriate words at their disposal.

Is it just students?

The truth is that many adults struggle to reflect well. Some people struggle to see how good things actually were because they get hung up on the bad things. Others struggle to see the bad bits because they are distracted by the good bits. Even then many will struggle to do the diagnosis side and look for ways to improve. It's difficult to recognise flaws in yourself, and often even harder to come up with an alternative method that will improve things. If we all found it easy then the role of coaches and mentors would be redundant.

As part of thinking about how well our students are reflecting perhaps we should all take a little time to think about how good we are at reflecting on our own practice? How honest are we with ourselves? How objective are we? How constructive are we in terms of making and applying changes as a result of our reflections?

Don't stop just because it's difficult

Vitally, just because students struggle to reflect in a coherent or high order way doesn't mean we should stop asking them to reflect. But we shouldn't be foolish enough to expect a spectacularly insightful self assessment from students the first time they try it. As with any cognitive process we should give them support to help them to structure their reflections. This support is the same kind of scaffolding that may be needed for any other learning:

Model it: Show them some examples of good reflection. Perhaps even demonstrate it in front of the class by reflecting on the lesson you've just taught?

Give a foothold: Sentences are easier to finish than to start - perhaps give them a sentence starter, or a choice of sentence starters - the improvement in quality is massive (See this post for some ideas on this)

Give feedback on the reflections: As part of responding to the reflections in marking dialogue give guidance on how they could improve their reflections and not just their work.

Give time for them to improve: A given group of students that have never self assessed before shouldn't be expected to do it perfectly, but we should expect them to get better at it given time and guidance.

As ever I'd be keen to know your thoughts, your experiences and if you've got any other suggestions....

Saturday, 10 May 2014

SOLO to open up closed questions

I've been dabbling with SOLO for a while now. It's been part of bits of my practice (see here, here and here), but I've yet to embed it in all lessons as fully as I would have liked. I have used it as a problem solving tool, or to help structure revision, but have not really deployed SOLO on a more day to day basis, and I want to change that.

I recently completed an interview for an Assistant Head position and as part of that was asked to teach a PSE lesson. This took me well out of my Maths comfort zone, so I had to give the planning deeper consideration than a maths lesson might have. After some thought I decided to introduce SOLO as part of the lesson, and it worked really well...

SOLO as a structure for discussion

I was teaching this PSE lesson to a group of year 7 students that I had never taught before and I knew that they had never seen SOLO before. As such a bit of my lesson needed to become an intro to SOLO. Fortunately the symbols are so intuitive that once I'd suggested that a single dot (Prestructural in SOLO terminology) meant you basically knew nothing about a topic, and a single bar (Unistructural) meant you knew something about it, the students were able to develop their own really good working definitions for Multistructural, Relational and Extended Abstract:

Once they had defined this hierarchy I could refer back to it at any point in the lesson and they knew what I was talking about. As such when I asked a question and the student responded with an answer I could categorise their response using the SOLO icons, such as "one bar," "three bar," "linked bar." If the student gave a "one bar" response I then asked them, or asked another student what was needed to make it a "three bar" response, and so on.

I was really pleased with how natural the discussion became, escalating up to really high level answers in a structured way. Similarly the students could use the same method with each other to improve their written answers through peer and self assessment. It even gives an easy way to open up a closed question... For example:

T: "Name a famous leader"

P: "Nelson Mandela"

T: "What type of answer is that?"

P: "It's just a fact so it's got to be One bar"

T: "How could the answer be improved?"

P: "Give more facts about him, like that he led South Africa, or say why he was famous"

T: "Can you improve that further?"

P: "Maybe make links to other countries or compare him to other leaders"

T: "Fantastic, work on that with your partner..."

Rightly or wrongly, I have a feeling that the opportunity for this type of discussion is much more common in a subject like PSE, and the SOLO linkage is much clearer as a result. However, it got me thinking about how this approach could be used in the same way for Maths...

SOLO vs closed questions

A constant battle for maths teachers is the old "there is only one right answer in maths." Now of course that may be true in terms of a numerical value, but that ignores the process followed to achieve that answer, and often there are many mathematically correct processes that lead to the same final answer. In more open ended activities there may also be multiple numerical answers that are "right."

In maths we constantly battle to get students to write down more than their final answer and to show their full method. Following my experience of using SOLO for PSE I started thinking about how to use it to break down the closed answers we encounter in maths. As such I've put this together as a starting point...

The pupil response could be something that is seen written down in their working, or something that they say verbally during discussion. The possible teacher response gives a suggestion of how to encourage a higher quality of response to this and future answers. This could be part of a RAG123 type marking (see here for more info on RAG123), verbal feedback, or any other feedback process.

An alternative is to use it for peer/self assessment, again to encourage progress from closed, factual answers, to fuller, clearer answers:

I realise I may be diluting or slightly misappropriating the SOLO symbols a little, e.g. is the top description above truly Extended Abstract or is it actually only Relational? In truth I don't think that distinction matters in this application - it's about enabling students to improve rather than assigning strict categories.

Proof in the pudding

The assessment ladder is part of a lesson plan for Tuesday, and I am going to try and use the pupil response grid throughout the week to help open up questions and encourage students to think more deeply about the answers - watch this space for updates.

As always - all thoughts & comments welcome.

Saturday, 3 May 2014

Policies not straightjackets

I'm starting to lose track of the number of times I've heard or seen people say that they can't do or try something because it's out of line with their school or department policy. It really worries me when I hear that - it means they feel unable to innovate or experiment with something that could be an improvement.

Most often for me it's linked with RAG123, but I've seen it at other times in school, and all over the place on twitter too. It normally goes something like this:

- Person A: "Why not try this (insert suggested alternative pedagogical approach here)?"

- Person B: "That sounds great and I'd love to, but our policy for (same general area of pedagogy) means I can't try it."

More specific examples I've actually seen/heard over the years include:

A: "For that lesson why not try using a big open ended question as your learning objective that all students work towards answering?"

B: "I can't because we're required to have 'must, should, could' learning objectives for all lessons"

A: "Could you re-arrange the tables in your room to help establish control with that difficult group? Perhaps break up the desks to break up the talking groups?"

B: "No because our department policy says we have to have the tables in groups to encourage group work."

A: "Why not try RAG123 marking?"

B: "I can't because our marking policy requires written formative comments only."

What are policies for anyway?

Policies should be there to provide a framework of good basic practice that all in a given organisation can use as a bare minimum to baseline their practice. However there is a difference between a framework to guide and a set of rules to be applied rigidly.

For example a policy that says that learning objectives must include suitable differentiation for the class being taught is substantially different to saying that all lessons are required to have Must, Should, Could learning objectives. One is the essence of what we really want, the other is a single, rigid example of how this might be achieved. One allows the teacher to use their professional judgement to set objectives in a way that is appropriate for their relationship with that class and the material being taught; the other applies a blanket approach that assumes that every lesson by every teacher with every class is best set up in an identical fashion.

For me policies should set out a standard that is the bare minimum to ensure that the students get a good deal in that aspect. For example if a teacher is unsure of how often to mark their books the policy should clarify the minimum requirement, it should also detail what minimum information is needed in order for it to count as good marking.

However, policies should never stifle innovation, and should never prevent the trial of something that could be even better. They also shouldn't dictate set structures that can't be deviated from under any circumstances - doing it better than the policy lays down should always be allowed!

Teachers as professionals should always have the option to deviate from the policy if it will produce better outcomes for their students in that particular situation (and if this becomes a consistent improvement then perhaps the policy should change to incorporate the deviation so that everyone benefits). However as professionals they should be both able and willing to justify a decision like this if questioned. Similarly if they have deviated from policy to try something that turns out to have not been so good then as professionals they should acknowledge this and return to the policy.

Consistency not uniformity

The bottom line is that policies should ensure a consistency in quality of experience, which mustn't be confused with a uniformity of experience. Quality in education is about high standards, high expectations and about professionals making informed decisions about how to get the best from the students in front of them. Quality is not about every teacher doing exactly the same thing in exactly the same way; if it were, we could record model lessons and just play them to students, or just learn scripts to follow.

Uniformity and rigidity aren't the answer to the multi-faceted challenge that teaching presents; we can't always assume that one size fits all. Therefore policies should never be straightjackets. Policies should be guidelines and bare minimums, with innovation and improvement specifically allowed and encouraged.

Comments always welcome - I'd be interested to know your thoughts. :-)

Saturday, 26 April 2014

RAG123 user survey - the results!

I posted the RAG123 survey a few weeks ago and have now collected enough responses for it to be meaningful.

Don't know what RAG123 is? See here and here.

In total 40 people responded, which I know is fewer than the number that are actually using RAG123, but it represents those that saw the tweets about the survey and found the time to complete it - for which I am grateful as I know time is precious. 40 isn't a massive number, but it's enough to draw some conclusions on...

A quick health warning - these are the results of 40 responses - any statements made refer to the views of this sample only and shouldn't be extrapolated to wider populations. Also this was a USER survey - I've not got data from non-users, that wasn't part of the exercise. I'll also be clear that one of the responses is me - I'm a RAG123 user after all.

Profile of users

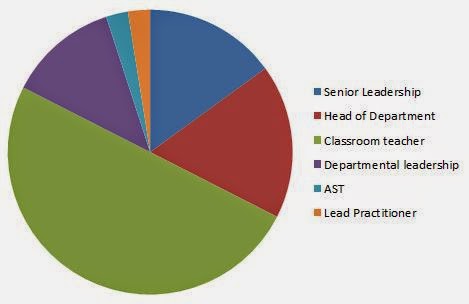

I was worried at one point that lots of people I communicate with about RAG123 appear to be departmental leadership or SLT. It made me wonder whether it is truly sustainable for a mainscale classroom teacher; however, 50% of respondents are classroom teachers...

[Chart: Profile of RAG123 users by job function]

85% of respondents first heard about RAG123 via twitter, 5% heard from others in their school, and the final 5% are Rob Williams and I, who did the first trial at our school in November.

Subject coverage

The majority of respondents teach maths (60% if you include those who also teach another subject), and the next big group is science (25% if you include all who mention science). Humanities had 5% and the remaining few are individual teachers of other subjects.

[Chart: Respondents' subjects]

I wouldn't want anyone to draw a conclusion from this that RAG123 only really works for Maths and Science. Notably the two blogs/twitter feeds that have been pushing this idea are mine (Maths based) and Damian Benney's (here - Science based), so it's hardly surprising that there is a bias here. What I am pleased by though is the fact that other subjects are represented, including the notable "Whole School." I do know from twitter exchanges that RAG123 has been used in MFL, Music, and some other subjects too - it just happens that they didn't complete the survey.

Part of doing this survey was to collect some info on who was using RAG123 so we could share tips and best practice more directly. Users that included their twitter ID have been sorted by subject and can be found in these lists.

Impact on pupils

That's what we do it for after all!!

An overwhelming 82.5% report an improvement in either effort or attainment, or improvements in both. 10% are new RAG123 users and felt it was too soon to detect any changes, and the remaining 7.5% report no change on the part of the pupils.

[Chart: Reported impact on pupils following RAG123 introduction]

Impact on workload

One of the things I found when I started RAG123 was that it improved my workload, so I was interested to see what others thought...

[Chart: Impact RAG123 has had on perceived workload]

55% state their workload has decreased, 32.5% say there has been no change, and 12.5% state an increase.
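To put those percentages back into people, here's a minimal Python sketch. It assumes all 40 respondents answered both the pupil impact and the workload questions; the variable names and shortened labels are my own, purely for illustration.

```python
from fractions import Fraction

RESPONDENTS = 40  # total responses reported in this survey


def to_count(percent, total=RESPONDENTS):
    """Turn a quoted percentage back into a head-count using exact fractions."""
    return Fraction(str(percent)) * total / 100


# Percentages as quoted in this post (labels shortened for illustration).
workload = {"decreased": 55, "no change": 32.5, "increased": 12.5}
pupil_impact = {"improved": 82.5, "too soon to tell": 10, "no change": 7.5}

workload_counts = {label: to_count(p) for label, p in workload.items()}
pupil_counts = {label: to_count(p) for label, p in pupil_impact.items()}

# With 40 respondents, each person moves a percentage by this much.
step = Fraction(100, RESPONDENTS)

for name, counts in (("Workload", workload_counts), ("Pupil impact", pupil_counts)):
    for label, count in counts.items():
        print(f"{name} - {label}: {count} of {RESPONDENTS}")
print(f"One respondent = {float(step)} percentage points")
```

With a sample of 40, the only possible results are multiples of 2.5%, which is why figures like 32.5% and 12.5% appear: the 12.5% reporting an increased workload is just 5 people.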

Notably, of those reporting increased workload, all but one recognise improved pupil effort and attainment (the remaining one response is a "too soon to tell"). In the write-in comments all of those with an increased workload are still very positive about RAG123. For example:

"Although I am not perfect at RAG123 and still have to do STAR, it has made marking so much quicker and actually I like doing it."

"My dept and I were sceptical and only did it cos u kept tweeting no negative feedback yet!!!! But we are sold!!"

In terms of where it has had the biggest impact, 68% mention marking, 53% mention dialogue and 60% mention planning (as these don't add to 100% you'll realise that many mention more than one of these!)

Best things about RAG123

This was a free text bit of the survey and the responses ranged from a few words to much more detailed. I could try to pick and choose best bits, but in all honesty it's best just to see the full text cut & pasted in here:

Picking up on misconceptions at source and the value pupils place on such regular marking. Also the way that informs your planning. Impossible to say just one.

Dialogue and relationships with students

Although my work load has increased as I am now taking books in every lesson for checking RAG123, it is a positive increase. I am able to judge how well my lesson has gone straight away. I can use the RAG123 to set targets more effectively and cater better for the individual. As a result DIRT happens every lesson now which I hope will pay off with regard to progress over time and stickability. My students are responding positively to me monitoring their progress so closely and a better dialogue has been established. If I find a smarter way of recording targets in exercise books so there is clear signposting of what is going on for observers, my workload should decrease in the future.

Informs future planning

The simplicity of it and the ease of use.

There are many best things, and the only downside is setting aside the time every lesson to make sure students do their part in it.

Marking is very quick. Pupils marking their own work a real game changer.

I know where everyone is after every lesson and can therefore plan for this in the next lesson. No one slips through the net; misconceptions identified readily (with more certainty than other AFL techniques) - there's nowhere to hide! No chance for bad habits to develop.

Communication with students

You can fully track progress of the whole class. I can identity misconceptions earlier and check students motivation.

Improved dialogue with students

Simple self assessment

It helps me identify what is not making a piece of work G1 and able to then identify where to improve.

Enables frequent marking and formative feedback.

Ability to plan effectively the next lesson and show progress.

quick whole class overview of progress and understanding

The students know that I am looking at the books very regularly and can write me messages that I will read.

I also get them to hand in their books in RAG123 piles so I can start with/spend more time on the students who need more help.

Students are getting used to assessing their own understanding, which I think will help with their revision.

I feel like I know my students better and what they have understood

Makes sure the kids complete their work - being able to keep on top of exactly what they are doing

No more marking guilt and amazing dialogue with students.

The ease, and the fact you know where the kids are after each lesson

quick feedback

Much more informed about planning. Kids love it and whilst I'm not sure any improved attainment is down to this I am convinced improved effort is. Combining it with pupils marking their own/each others work with green pens. We mark in red.

RAG123 is quick and extremely effective. I have mini dialogues with students in their books and can see patterns in behaviours as well and spot misconceptions quickly.

The best thing and what has helped me the most is being able to manage my marking load better. We have weekly book checks, one week yr7 and yr8 and the next week, yr 9 and 10. Before I was always in a mad panic about these. Now I know that although I may not be doing great at following the latest marking policy, my books are marked and feedback is there. (Especially those which I have still kept on top off!!) I know I'm not RAGging properly as I'm trying to squeeze too much feedback in, but it's much better than the paragraphs I wrote before!

planning for next lesson, allows me to monitor how the students are doing on a regular basis. They self-regulate their effort often.

student focus on their own progress and effort

Checking work after each lesson and before the next one!

The opportunity to have dialogue with pupils. They enjoy doing it too..

Pupils are excited to read and respond to my feedback each day. The impact it's having is worth the extra effort!

It's instant and instantly useful. Supports using LO/SC in all lessons.

I know how everyone is doing and what they need to do to improve or correct misunderstandings.

Quick, easy for both learner and teacher. gives you indication on how class doing, useful for ensuring tailor made lessons.

Regular monitoring, review link to lesson objectives, planning response better informed.

Simplicity, focus on student involvement and it's evidence based system.

The easiness of marking. It helps me to keep on top of it. I now feel a lot more knowledgable about all of my classes.

The worst things about RAG123

For balance I also need to include all of the negatives - this is an unedited cut and paste of "the worst things" - I'll try to address some of the comments in another post:

It can be a pinch if you have parents' evening/meetings after school. Can be overcome though!!

That more people aren't using it!

Nothing! What I want to do is print the RAG123 criteria on a sticker and have it at the front of exercise books. That way I can then have the success criteria on the board linked to 123 which is something I have not yet been doing. I also need to get students to improve their justifications which will come once I have linked the RAG123 criteria to the success criteria.

Colleagues' reactions when you say you mark after every lesson.

Have to remember to mark after every lesson for it to be effective

Nothing

The students who don't mark work or RAG it.

Those who just go for A2 every time to save actually thinking. Part of the reason that we are going to try red/orange/yellow/green so you are above or below half way.

Having so many books around at school, because I am not tidy and when I lose 1 book (no doubt student put in wrong pile) I have to look for it as I know that they handed it in. (Student then says oh yeah I forgot I have it because I didn't hand it in - grrrrr)

Not sure yet

It doesn't encourage students to make subject specific comments

Needs to be done very regularly.

Sometimes hard to summarise effectively into RAG123 categories

effort grades, personally I don't use them. Grading effort is unreliable.

Not found one yet.

not too sure yet

We also have to give SWANS feedback at least every three weeks, so I have to do that as well as RAG123.

It is sometimes difficult to get the books marked before the next lesson but it's worth it

Some students really don't like it

Marking the books everyday

Making sure you do it before you go home...

Not sure yet

Still struggle to find time to mark after every lesson - but I put that down to pastoral responsibilities - those pesky kids and parents stop me doing most things when I plan to!!!

If I set homework then I miss a lesson or two with some classes. Maybe I could think about giving separately homework books, but my experience with these has not been good.

The pressure I feel when I've had a bad week and fallen behind. Have five lessons out of six most days, and sometimes struggle to RAG everyday before the next lesson. This is because I've not got it right yet, but I get very stressed and then fall even more behind!!

Need to make more of a glance and RAG123 thing. Have started timing myself now!!!

Doesn't work if you can't keep up checking every lesson - I've fallen victim to this.

students overestimating their understanding

Probably feeling the pressure to check after every lesson, especially when there are after school commitments like parents evenings etc

I have found that it doesn't lend itself to every lesson.

I sometimes struggle to find the time for the students to do it properly so it ends up being rushed.

Daily expectation! We have probably gone a step too far with it! Setting individual questions, activities even card sorts etc. Viewed as an investment in next lesson rather than a quick response to previous. We are worried about sustainability though.

Sometimes harder to use in English where you're not always working on something as discrete as maths.

Can't think of any. It's easily the best thing I've done in my teaching career.

No real.negatives. some year 11 boys just sat A1 as it relates to breaking bad!

It doesn't record the volume of quality verbal feedback given in maths - but then neither do other written systems, the major issue with ofsted's version of marking and feedback monitoring.

Apathy of some to look at the benefits. Mainly, "you got this from twitter!" What do Ofsted think, well now I know.

I sometimes struggle to mark books every day. Especially on one week where I teach 4 days without a free period or lunchtime or after school.

So there you have it...

There is a bit more analysis to do, and I still need to sort out the top tips bit - there are some gems in there. However I wanted to get this post published this weekend...

Notably for me all of the worst bits are things people struggle with, not reasons to stop. Yes it can be difficult to do every day, yes it takes time for students to respond to it - we need to train them in how to use it and learn how to use it ourselves.

Still sceptical of RAG123? Give it a try!!!

Sunday, 13 April 2014

How do you do RAG123 so quickly?

Whenever people start off with RAG123 they take too long marking. It's not their fault - they're used to taking longer and struggle to do it more quickly. When I say a full set takes 15 minutes I am often greeted with incredulity. I thought I'd prove it... (video run time 3 mins - all the rest is explained...)

While the set of 26 books marked in the video took me 15 minutes 30 seconds, you can see that I write an extra comment/response in almost all of them - for me this is the longest that a RAG123 set ever takes. Sometimes a full set can take less than 10 minutes if I'm not writing extra comments. As part of recording this video I actually filmed myself marking 3 full sets of books - that's 86 books and they were all reviewed in 38 minutes.
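For anyone who wants to see what those timings mean per book, here is a quick sketch of the arithmetic in Python. The function name is just an illustration of mine; the only inputs are the figures quoted above (26 books in 15 minutes 30 seconds, and 86 books in 38 minutes).

```python
def seconds_per_book(minutes: float, books: int) -> float:
    """Average review time per book, in seconds."""
    return minutes * 60 / books


# The single set shown in the video: 26 books in 15 minutes 30 seconds.
video_set = seconds_per_book(15.5, 26)

# All three sets filmed: 86 books in 38 minutes.
three_sets = seconds_per_book(38, 86)

print(f"Video set: about {video_set:.0f} seconds per book")
print(f"Three sets: about {three_sets:.0f} seconds per book")
```

That works out at roughly 36 seconds per book for the set in the video, where I wrote extra comments, and roughly 27 seconds per book across all three sets.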

Not just about whizzing through books

I really want to emphasise though that RAG123 is actually a whole teaching approach, not just about blasting through a set of books in 10-15 mins. The real strength comes from responding to what you see to shape how you approach your next lesson. It informs planning, it makes differentiation better, it helps you to get to know your students better.

Some people may say that "proper AFL in lesson is better than picking things up from reviewing books." To some extent I agree, but this gives an extra method of AFL, and one in which students have nowhere to hide.

Before RAG123 I thought I was quite good at AFL in lesson. I thought I had a good handle on what each student could and couldn't do, and what each student had actually done in lesson. When I started using RAG123 every lesson I found that I was wrong. I had a partial understanding at best, and RAG123 helps me to complete this picture. The insight it gives me helps me to meet the needs of my classes much more effectively than I ever have before. I now dislike planning a lesson until I've reviewed the output of the last one - otherwise it's too much of a guessing game.

Importantly, RAG123 shouldn't replace any AFL or other in-class strategy. It also shouldn't be the only form of feedback the students receive.

As always - comments are welcome, please let me know your thoughts...

(In case of trouble streaming the video you can download a copy here http://bit.ly/RAG123video)
